We now have, as you can see, the first image of the event horizon of a black hole, a place of frozen time and gravity gone mad. The black hole at the heart of M87 has a mass billions of times greater than the Sun and measures 40 billion kilometres across - more than twice the distance from here to the Voyager 1 spacecraft. Fall inside this heart of utmost darkness and you enter an inescapable realm where space and time become interchangeable. At the very centre lurks the singularity, a point of near-infinite density where the known laws of physics fail. Nothing but death awaits you here. All you'll hear are the trapped screams of scientists who went mad at the sheer baffling complexity of the whole thing.

Apparently it would look like this.

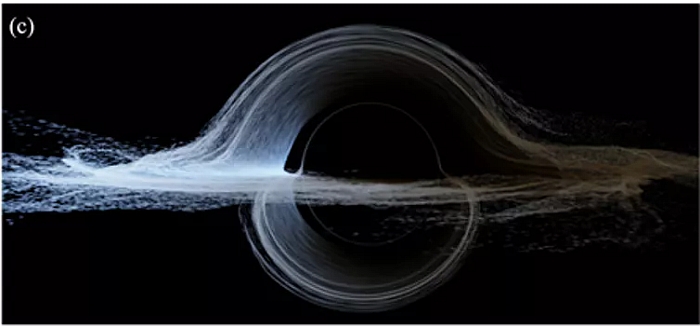

What's remarkable is just how close the real image looks to the predictions made years beforehand :

Clearly those relativity experts know what they're doing. The main difference seems to be that the real image has two bright spots while the simulation has just the one. My more expert colleagues (we were lucky enough to get a sneak peek at the real image) aren't sure why this is, so that might be interesting.

You might be wondering, though, why this image doesn't look as nice as previous models we've seen. And why did they look at the black hole in M87 when the one in our own Milky Way is much closer ?

The second question can be partly answered by Red Dwarf :

Which is only slightly inaccurate. The black hole at the centre of the Milky Way is extremely inactive, meaning there's not much material falling in for it to devour. So it essentially is a case of black on black (or nearly so). The one in M87, however, is much more active, with huge jets of material extending well beyond the galaxy itself. That means that there's something for the hole itself to be silhouetted against.

M87 and its jet.

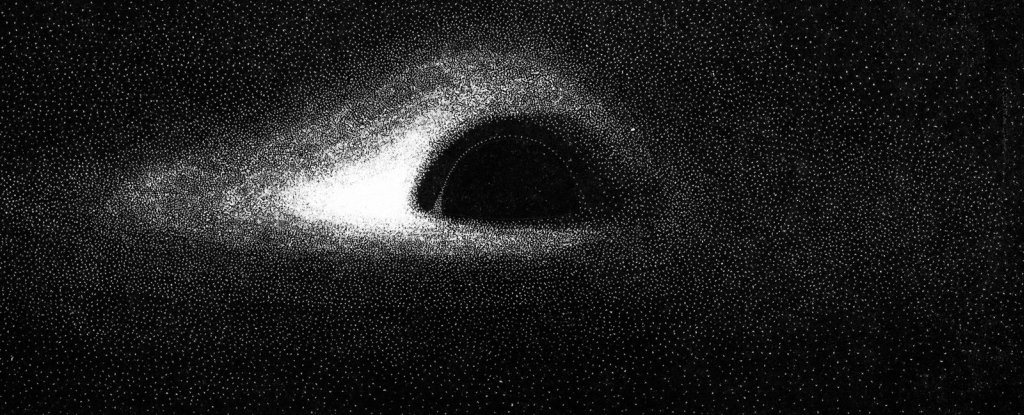

So why doesn't this first image look all that much like the more spectacular renderings we've been seeing ? For example, the first attempt at visualisation was, despite the claims made about the movie Interstellar, done as far back as the 1970s :

With more modern simulations giving much more detailed predictions :

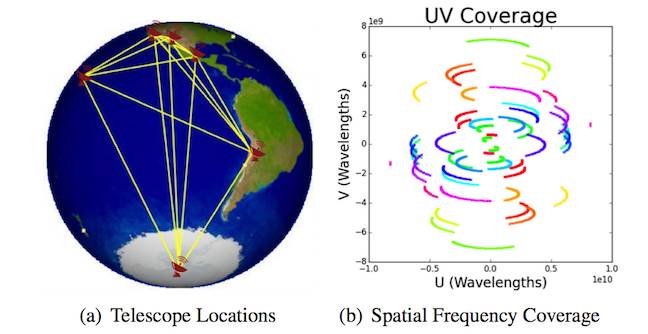

Those models look at optical wavelengths, but the Event Horizon Telescope operates over a broad range (continuum) of sub-mm wavelengths. That means we can't see redshift effects causing one side to appear bluer than the other since we're not getting the precise frequency information. We couldn't get the same kind of optical colours anyway since it uses a very different wavelength of light. The reason it does this is that getting even the fuzzy image that we've got requires extreme resolution, equivalent, they say, to seeing a credit card on the Moon. For that we need an interferometer, a set of telescopes linked together to provide higher resolution.
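To put rough numbers on the credit card comparison, here's a back-of-the-envelope sketch using the usual rule of thumb that resolution is about wavelength divided by aperture. All the input values are my own assumptions rather than anything official: an observing wavelength of about 1.3 mm, a baseline as long as the Earth's diameter, a standard-width credit card and the mean distance to the Moon.

```python
import math

# Conversion factor: radians -> microarcseconds
RAD_TO_MICROARCSEC = math.degrees(1) * 3600 * 1e6

def angular_resolution_rad(wavelength_m, aperture_m):
    """Rough diffraction-limited resolution, theta ~ wavelength / aperture."""
    return wavelength_m / aperture_m

def angular_size_rad(size_m, distance_m):
    """Small-angle approximation for the apparent size of an object."""
    return size_m / distance_m

# Assumed illustrative values (not taken from the post):
WAVELENGTH_M = 1.3e-3        # roughly 1.3 mm observing wavelength
EARTH_DIAMETER_M = 1.2742e7  # longest possible ground-based baseline
CARD_WIDTH_M = 0.0856        # width of a standard credit card
MOON_DISTANCE_M = 3.844e8    # mean Earth-Moon distance

eht_uas = angular_resolution_rad(WAVELENGTH_M, EARTH_DIAMETER_M) * RAD_TO_MICROARCSEC
card_uas = angular_size_rad(CARD_WIDTH_M, MOON_DISTANCE_M) * RAD_TO_MICROARCSEC

print(f"Earth-sized array: ~{eht_uas:.0f} microarcseconds")
print(f"Credit card on the Moon: ~{card_uas:.0f} microarcseconds")
```

Both numbers come out at a few tens of microarcseconds, so under these assumptions the comparison is at least in the right ballpark.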

Interferometry is something of a dark art in astronomy. The basic requirement is that you know the precise distance between each antenna. That means you know how much longer it takes light to reach one antenna than the other, and through some truly horrendous mathematics it's possible to reconstruct an image with much higher resolution than for a single reflector. The shorter the wavelength, the higher the accuracy you need, making this very difficult at optical wavelengths but easier in the sub-mm bands.
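As a very rough illustration of why shorter wavelengths are so much more demanding, here's a sketch of the two quantities involved: the extra light travel time between two antennas, and the phase error you'd pick up from getting an antenna position slightly wrong. The function names, the 0.1 mm position error and the two wavelengths are all hypothetical choices of mine.

```python
import math

C_M_PER_S = 299_792_458.0  # speed of light

def geometric_delay_s(baseline_m, angle_rad):
    """Extra travel time to the farther antenna for a distant source.

    angle_rad is measured from the direction perpendicular to the baseline,
    so a source directly "overhead" of the baseline gives zero delay.
    """
    return baseline_m * math.sin(angle_rad) / C_M_PER_S

def phase_error_deg(path_error_m, wavelength_m):
    """Phase error (degrees) caused by a given path-length error."""
    return 360.0 * path_error_m / wavelength_m

# Assumed example: a 0.1 mm error in the path length between two antennas
PATH_ERROR_M = 1e-4
err_mm_band = phase_error_deg(PATH_ERROR_M, 1.3e-3)  # ~1.3 mm wavelength
err_cm_band = phase_error_deg(PATH_ERROR_M, 0.21)    # 21 cm wavelength

print(f"Delay on a 1000 km baseline at 30 degrees: "
      f"{geometric_delay_s(1.0e6, math.radians(30)) * 1e3:.2f} ms")
print(f"Phase error at 1.3 mm: {err_mm_band:.1f} degrees")
print(f"Phase error at 21 cm:  {err_cm_band:.2f} degrees")
```

The same physical error that's negligible at 21 cm is a sizeable chunk of a wave at 1.3 mm, and at optical wavelengths it would be many whole waves.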

While a single-dish radio or sub-mm telescope works basically like a camera, measuring the brightness at many different points in space in order to construct an image, an interferometer is a much more subtle and vicious piece of work. The zeroth-order description is that you can combine telescopes and get an image of resolution equivalent to a gigantic single-dish telescope, one as big as the separation between the dishes (the baseline).

With a single dish, effectively you have a single aperture of finite size through which you receive light. It sounds odd to think of a reflecting surface as an opening, but that's basically what it is. There are no gaps in the single dish aperture, so wavelengths of all scales can enter unimpeded (unless they're so large they can't, in effect, fit through the hole). An interferometer is not like that. It's like comparing the hole blasted in a wall by a single cannonball to those made by some machine-gun fire.

The simplest way of describing the effect of this is that there's also a lower limit on the resolution of the telescope. The longer the baselines, the higher the resolution and the sharper your images get. But if you don't have shorter baselines as well, you won't be able to see anything that's diffuse on larger scales - that material gets resolved out; you could see the fine details but not the big stuff. A photon of wavelength 10cm is not going to have any problems fitting through a hole 1m across, but clearly trying to squeeze it through two holes each 1mm across but separated by 1m is a very different prospect. It's not quite as simple as this in reality, but it gets the idea across.

So, while the upper limit on resolution is given by the longest baseline, the lower limit is given by the shortest baseline. This means the overall quality of the image is much more complicated than just the maximum separation of the antennas : maximum resolution and sensitivity are nice, but they aren't the whole story.
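Here's a minimal sketch of that idea, again using the wavelength-over-baseline rule of thumb. The baseline lengths and the 21 cm wavelength are made-up values for a hypothetical array, not those of any real telescope.

```python
import math

RAD_TO_ARCSEC = math.degrees(1) * 3600

def angular_scale_range(wavelength_m, baselines_m):
    """Return (finest, largest) angular scales in arcseconds.

    finest  ~ wavelength / longest baseline  (the resolution)
    largest ~ wavelength / shortest baseline (bigger structures get resolved out)
    """
    finest = wavelength_m / max(baselines_m) * RAD_TO_ARCSEC
    largest = wavelength_m / min(baselines_m) * RAD_TO_ARCSEC
    return finest, largest

# Hypothetical array: baseline lengths in metres, observing at 21 cm
baselines_m = [40.0, 150.0, 400.0, 1000.0]
finest, largest = angular_scale_range(0.21, baselines_m)

print(f"Finest detail:     ~{finest:.0f} arcsec")
print(f"Largest structure: ~{largest:.0f} arcsec")
```

Anything much more extended than the larger number is effectively invisible to this array, however long you stare at it.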

You don't have to worry about this for single dish telescopes, because remember they're like having one great big hole instead of lots of little ones. Consequently, while the Very Large Array has a collecting area about one fifth as much as Arecibo, and a resolution at least four times better, its sensitivity to diffuse gas is almost a thousand times worse.

To some extent you can compensate for this. The most obvious way is to observe for longer and collect more photons. That helps, but because sensitivity in that regard scales with the square root of the integration time, if you want to double your sensitivity you have to observe for four times longer. That's bad enough for single dishes, but the situation is more complicated for interferometers. It's the number of different length baselines that determines your sensitivity to structures of different angular scales, not how many photons you collect. These are limits you can never quite overcome.
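The square root scaling is easy to play with. This little sketch assumes nothing more than noise being proportional to one over the square root of the integration time; the function names and the reference values are hypothetical.

```python
import math

def noise_after(time, noise_ref, time_ref):
    """Noise level after `time`, given noise_ref was reached in time_ref."""
    return noise_ref * math.sqrt(time_ref / time)

def time_needed(improvement, time_ref):
    """Integration time needed to improve sensitivity by a given factor."""
    return time_ref * improvement ** 2

# Starting from a 1 hour observation:
for factor in (2, 3, 10):
    print(f"{factor}x more sensitive needs {time_needed(factor, 1.0):.0f} hours")
```

Going ten times deeper costs a hundred times the observing time, which is why nobody does it lightly.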

You can make things better though, because while you're observing the world turns. What that means is that the configuration of the antennas changes from the point of view of the target. The longer you observe, the greater the change, and the more of the "uv plane" you fill in. That means you get much more even sensitivity to information on different scales, getting you a cleaner and more accurate image. It's a bit like looking through a sheet of cardboard full of holes - the more you rotate it, the more information you get about what's behind.
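For the curious, here's a rough sketch of how the uv plane fills in as the Earth rotates, using the standard textbook expressions for the u and v coordinates of a baseline as a function of the target's hour angle and declination. The three baseline vectors, the grid cell size and the 1.3 mm wavelength are made-up illustrative values, not real EHT station positions; the declination is roughly that of M87.

```python
import math

def uv_point(baseline_xyz_m, hour_angle_rad, dec_rad, wavelength_m):
    """Projected baseline (u, v) in wavelengths for a given hour angle."""
    lx, ly, lz = baseline_xyz_m
    sin_h, cos_h = math.sin(hour_angle_rad), math.cos(hour_angle_rad)
    sin_d, cos_d = math.sin(dec_rad), math.cos(dec_rad)
    u = (lx * sin_h + ly * cos_h) / wavelength_m
    v = (-lx * sin_d * cos_h + ly * sin_d * sin_h + lz * cos_d) / wavelength_m
    return u, v

def filled_cells(baselines, hours, dec_deg, wavelength_m, cell, step_min=5):
    """Count coarse uv grid cells sampled in an observation centred on transit."""
    dec = math.radians(dec_deg)
    cells = set()
    n_steps = int(hours * 60 / step_min) + 1
    for k in range(n_steps):
        h_hours = -hours / 2 + k * step_min / 60
        h = math.radians(15.0 * h_hours)  # 15 degrees of rotation per hour
        for b in baselines:
            u, v = uv_point(b, h, dec, wavelength_m)
            # Each baseline also samples the mirror-image point (-u, -v)
            for su, sv in ((u, v), (-u, -v)):
                cells.add((math.floor(su / cell), math.floor(sv / cell)))
    return len(cells)

# Hypothetical baseline vectors in metres (Earth-fixed equatorial frame)
baselines = [(4.0e6, 1.0e6, 2.0e6), (-2.0e6, 5.0e6, -1.0e6), (6.0e6, -3.0e6, 3.0e6)]

short_obs = filled_cells(baselines, 1, 12.4, 1.3e-3, cell=2e8)
long_obs = filled_cells(baselines, 8, 12.4, 1.3e-3, cell=2e8)
print(f"Cells sampled in 1 hour:  {short_obs}")
print(f"Cells sampled in 8 hours: {long_obs}")
```

Each baseline traces out an arc in the uv plane as the hour angle changes, so the longer track samples more cells than the short one.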

maintenance requirements - and also the not inconsiderable problem of weather concerns, which have to be suitable at every location at the same time. That's why getting this image took so long.

EHT uv tracks.

The crucial thing about interferometers is that unlike single dishes, they do not make direct measurements of their targets. They measure incoming light intensity, but that's not the same thing. Instead, the image has to be reconstructed from the data - and it doesn't give you a unique solution. For example, you can choose to weight the contributions from the longer baselines more strongly and get higher resolution, or prefer the shorter baselines and get better sensitivity - both are valid. There are of course limits to what you can legitimately do with the data, but a lot of choices are subjective and it's often said to be more of an art than a science. It's a far more difficult procedure than using a single dish - there is indeed no such thing as a free lunch.
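To make the weighting choice a bit more concrete, here's a toy sketch of two common schemes, usually called natural and uniform weighting. This is not what the EHT team actually did, just the general idea. The uv samples are invented, with lots of short baselines and only a couple of long ones, and the cell size and radius are arbitrary.

```python
from collections import Counter
import math

def cell_of(u, v, cell):
    """Index of the uv grid cell containing the point (u, v)."""
    return (math.floor(u / cell), math.floor(v / cell))

def natural_weights(uv_points):
    """Every sample counts equally, so densely sampled regions dominate."""
    return [1.0 for _ in uv_points]

def uniform_weights(uv_points, cell):
    """Down-weight each sample by how crowded its grid cell is."""
    counts = Counter(cell_of(u, v, cell) for u, v in uv_points)
    return [1.0 / counts[cell_of(u, v, cell)] for u, v in uv_points]

def long_baseline_share(uv_points, weights, radius):
    """Fraction of the total weight carried by samples beyond `radius`."""
    total = sum(weights)
    long_part = sum(w for (u, v), w in zip(uv_points, weights)
                    if math.hypot(u, v) > radius)
    return long_part / total

# Made-up samples: four short baselines, two long ones (arbitrary units)
uv_points = [(1.0, 1.0), (1.5, 0.5), (0.5, 1.5), (1.2, 1.8), (9.0, 1.0), (1.0, 9.0)]

natural_share = long_baseline_share(uv_points, natural_weights(uv_points), radius=5.0)
uniform_share = long_baseline_share(uv_points, uniform_weights(uv_points, 2.0), radius=5.0)
print(f"Long-baseline share, natural weighting: {natural_share:.2f}")
print(f"Long-baseline share, uniform weighting: {uniform_share:.2f}")
```

Same data, two perfectly defensible choices, and the long baselines go from a third of the total weight to two thirds. The resulting images would look noticeably different.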

That's where the "looking through holes" analogy breaks down. If you were looking through holes in a wall, it wouldn't matter if you looked through them one at a time and combined your measurements later. With interferometers it's crucial that you get the same data at the same time for every antenna - you can't just bang your data together and get a nice image out. Again, you get a reconstruction, not a measurement.

The good news is that means the image can only get better with time. The more uv coverage they have, the better the spatial sampling and the more uniform the sensitivity will be to different scales, and the closer the image will get to those detailed simulations. The bad news is that we'll all have to be patient. The interferometry behind the EHT is delivering astonishing results, but there is, as always, a high price to pay for that.
