I'm not a professional statistician, so I don't claim to understand the nuances of every kind of statistical test. Nevertheless, using certain tests is an unavoidable part of my work, and I do try to think about what they really mean. I tend to agree with the article below.

Let’s be clear about what must stop: we should never conclude there is ‘no difference’ or ‘no association’ just because a P value is larger than a threshold such as 0.05 or, equivalently, because a confidence interval includes zero. Neither should we conclude that two studies conflict because one had a statistically significant result and the other did not. These errors waste research efforts and misinform policy decisions.

We are not calling for a ban on P values. Nor are we saying they cannot be used as a decision criterion in certain specialized applications (such as determining whether a manufacturing process meets some quality-control standard). And we are also not advocating for an anything-goes situation, in which weak evidence suddenly becomes credible. Rather, and in line with many others over the decades, we are calling for a stop to the use of P values in the conventional, dichotomous way — to decide whether a result refutes or supports a scientific hypothesis.

Basically they are saying that the term "statistical significance" should not be used in the binary way it currently is. Significance is a matter of degree, and there simply isn't a hard-and-fast threshold to it. There is no single number you can use to decide if variables are or are not connected to each other. I emphasised the words "just because" above because it's important to remember that there are ways to irrefutably demonstrate a causal connection - just not by P-values alone.

We must learn to embrace uncertainty. One practical way to do so is to rename confidence intervals as ‘compatibility intervals’ and interpret them in a way that avoids overconfidence... singling out one particular value (such as the null value) in the interval as ‘shown’ makes no sense.

When talking about compatibility intervals, bear in mind four things. First, just because the interval gives the values most compatible with the data, given the assumptions, it doesn’t mean values outside it are incompatible; they are just less compatible. Second, not all values inside are equally compatible with the data, given the assumptions. Third, like the 0.05 threshold from which it came, the default 95% used to compute intervals is itself an arbitrary convention. Last, and most important of all, be humble: compatibility assessments hinge on the correctness of the statistical assumptions used to compute the interval. In practice, these assumptions are at best subject to considerable uncertainty.

What will retiring statistical significance look like? We hope that methods sections and data tabulation will be more detailed and nuanced. Authors will emphasize their estimates and the uncertainty in them — for example, by explicitly discussing the lower and upper limits of their intervals. They will not rely on significance tests. Decisions to interpret or to publish results will not be based on statistical thresholds. People will spend less time with statistical software, and more time thinking.

This is a sentiment I fully endorse. Whether it will really work in practice or if people do ultimately have to make binary decisions, however, is something I reserve judgement on.

I've previously shared some thoughts on understanding the very basics of statistical methodologies here, albeit from a moral rather than mathematical perspective. In light of the article, let me very briefly offer a few more quantitative experiences of my own here. Some of them can seem obvious, but when you're working on data in anger, they can be anything but. Which is exactly how science works, after all.

-1) Correlation doesn't equal causation

I'm assuming you know this already so I don't have to go over it.

0) Beware tail-end effects

The more events you have, the greater the number of weird events you'll have from chance alone. If you have a million events, it shouldn't surprise you to see a million-to-one event actually happening.
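To put a rough number on this, here is a minimal sketch. It assumes the events are independent and that each one has exactly a one-in-a-million chance of being "weird"; both are simplifications of mine, and the function name is just for illustration. The chance of seeing at least one such event in n tries is then 1 - (1 - p)^n.

```python
import math


def prob_at_least_one(p: float, n: int) -> float:
    """Chance that an event with per-trial probability p occurs at least once in n independent trials."""
    # 1 - (1 - p)**n, written with log1p/expm1 so it stays accurate when p is tiny.
    return -math.expm1(n * math.log1p(-p))


p_weird = 1e-6        # assumed chance of the weird event in a single trial
n_events = 1_000_000  # number of independent trials

print(f"{prob_at_least_one(p_weird, n_events):.3f}")
```

Under these assumptions the answer comes out at a little under two in three, so seeing the "million-to-one" event is the more likely outcome, not the surprising one.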

1) Being statistically significant is not necessarily evidence for your hypothesis

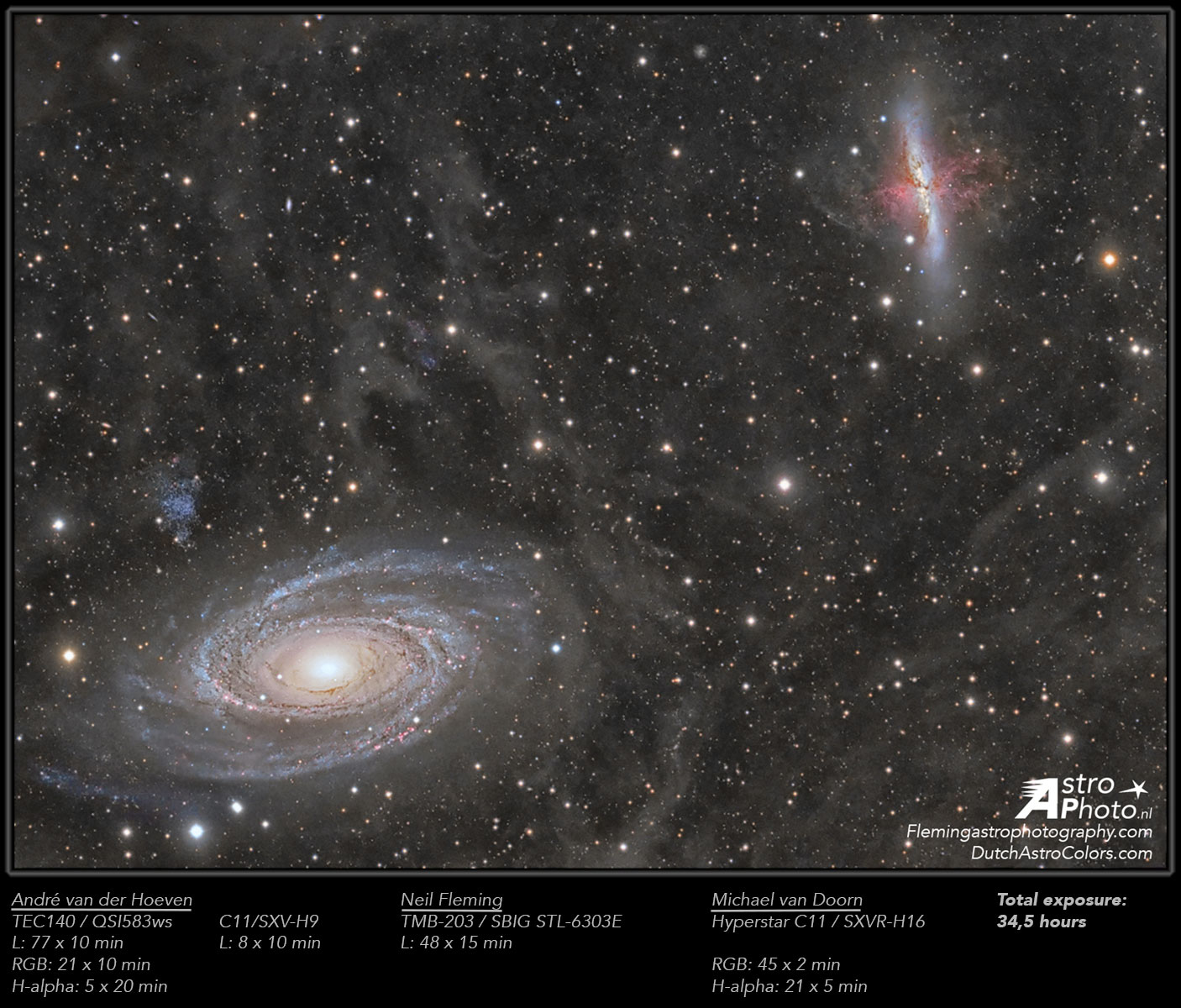

This is, as far as I can tell, the principal sin of those advocating that satellite galaxies are commonly arranged in planar structures. What their tests are actually measuring is the probability that such an arrangement could occur by random chance, not whether it's compatible with being in a plane. That's a rather specific case of using wholly the wrong sort of test though. More generally, even if you do use the "correct" test, that doesn't necessarily rule out other interpretations. Showing that the data is compatible with your hypothesis does not mean that other explanations won't do equally well or better even at the level of fine details - my favourite example can be found here.

In essence, if a number is quoted demonstrating significance, the two main questions to ask are :

1) Does this number relate to how unlikely the event is to have occurred randomly, or does it directly measure compatibility with a proposed hypothesis ?

2) Have other hypotheses been rendered less likely as a result of this ?

You can call your result evidence in favour of your hypothesis if and only if it shows that your hypothesis is in better agreement with the data than alternatives. Otherwise you've probably only demonstrated consistency or compatibility, which is necessary but not sufficient.

2) You're all unique, just like everyone else

There are an essentially infinite number of ways of arranging the hairs on your head, so the chance that any two people will have identical configurations is basically nil. But often such precision is insane, because most of those configurations will look extremely similar to each other. So if you want to work out the chance of people having a similar overall distribution of hairs, you don't need to look at individual follicle placement. What you need to think about is what salient parameter is relevant to what you're studying, e.g. median follicle density, which will be very similar from person to person. You may or may not be interested in how the density varies from region to region on individual heads, and if so you won't be able to just measure global properties like full head size and total follicle count (see point 5).

3) Everyone is weird

Everyone is not only unique, but they are also statistically unusual. This is known as the flaw of averages : the counter-intuitive observation that few people indeed are close to the average even on a small number of parameters. The more parameters you insist on for your average person, the fewer such people you'll find. There is, quite literally, no such thing as an ordinary man (as Doctor Who once put it).
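A toy simulation shows how quickly "average" people vanish. The sketch below assumes each trait is independent and defines "close to average" as falling in the central 30% of the population for that trait; both choices are mine, purely for illustration, and real traits are usually correlated, which softens the effect without removing it.

```python
import random


def fraction_average_on_all(n_people: int, n_traits: int, band: float, seed: int = 0) -> float:
    """Fraction of simulated people who sit in the central `band` of every one of n_traits traits."""
    rng = random.Random(seed)
    lo, hi = 0.5 - band / 2, 0.5 + band / 2
    count = 0
    for _ in range(n_people):
        # Each trait is modelled as an independent percentile rank, uniform on [0, 1).
        if all(lo <= rng.random() < hi for _ in range(n_traits)):
            count += 1
    return count / n_people


for k in (1, 2, 3, 5, 10):
    simulated = fraction_average_on_all(100_000, k, band=0.3)
    expected = 0.3 ** k  # independent traits simply multiply
    print(k, simulated, expected)
```

With one trait about 30% of people count as average; by ten traits, essentially nobody does.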

Another similar factor cited against planes of galaxies studies is the look elsewhere effect, which is in some sense a variation of the flaw of averages. Suppose you flick paint at a wall. You could compare different configurations by measuring how much paint splatter there is, but this would miss subtleties like the pattern the paint makes. You might find, for instance, that because your flicking motion has systematic characteristics, you could get linear features more often than you expect by chance.

What the "look elsewhere" effect says is that you should compare how frequently different types of unlikely features crop up. To take a silly example, it's fantastically unlikely that by random chance you'd get a paint splatter that looks like a smiley face. And it's equally unlikely that you'd get some other coherent structure instead, like a star or a triangle or whatever. But collectively, the chance that you get any kind of recognisable pattern is much, much greater than the chance you'd get any one specific pattern. Of course, how specific the parameters have to be depends on what you're interested in : if you keep getting smiley faces then something genuinely strange is going on.

4) Probabilities are not necessarily independent

This is a lesson from high school mathematics but even some professional astronomers don't seem to get it so it's worth including here. If you roll a fair dice, the chance you get a six is always, always, always one in six. So the chance of rolling two sixes in a row is simply 1/6 * 1/6, i.e. 1 in 36. There are 36 different outcomes and only one way of rolling two sixes. You can multiply probabilities like this because the events are completely independent of each other - the dice has no memory of what it did previously.
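The two-sixes arithmetic is easy to check by brute force. This short sketch just lists every ordered pair of rolls and counts the double six, then compares that with multiplying the two individual probabilities; the variable names are my own.

```python
from fractions import Fraction
from itertools import product

# Every ordered outcome of rolling a fair six-sided dice twice.
outcomes = list(product(range(1, 7), repeat=2))
double_six = [o for o in outcomes if o == (6, 6)]

p_enumerated = Fraction(len(double_six), len(outcomes))  # count favourable / count total
p_multiplied = Fraction(1, 6) * Fraction(1, 6)           # valid only because the rolls are independent

print(len(outcomes), p_enumerated, p_multiplied)  # 36 1/36 1/36
```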

If you have more complex systems, then it's true that if you expect the events to be independent, you can multiply the probabilities in the same way. But remember point 1 : your hypothesis is not necessarily the only hypothesis. The events may be connected in a completely different way to how you expect (e.g. a common cause you hadn't considered) so you can't, for example, infer that there is a global problem from a very small sample of weird systems if there's any connection between them.
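Here is a small worked example of how badly the naive multiplication can go wrong. The numbers are invented for illustration: a hidden common cause is present 10% of the time, a system is weird 91% of the time when the cause is present and 1% of the time otherwise. Each system on its own is then weird 10% of the time, but two weird systems together are far more likely than 10% times 10%.

```python
def marginal_weird(p_cause: float, p_given_cause: float, p_otherwise: float) -> float:
    """Chance that a single system is weird, averaging over whether the hidden cause is present."""
    return p_cause * p_given_cause + (1 - p_cause) * p_otherwise


def joint_weird(p_cause: float, p_given_cause: float, p_otherwise: float, n_systems: int = 2) -> float:
    """Chance that all n systems are weird when they share the same hidden cause."""
    with_cause = p_cause * p_given_cause ** n_systems
    without_cause = (1 - p_cause) * p_otherwise ** n_systems
    return with_cause + without_cause


# Hypothetical numbers, chosen so that each system alone is weird 10% of the time.
p_single = marginal_weird(0.1, 0.91, 0.01)
naive = p_single ** 2                  # what you get by assuming independence
actual = joint_weird(0.1, 0.91, 0.01)  # what the shared cause really gives

print(f"single: {p_single:.3f}  naive product: {naive:.4f}  with common cause: {actual:.4f}")
```

In this toy setup the real joint probability is roughly eight times the naive product, so two weird systems are much weaker evidence of a global problem than the multiplication suggests.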

5) You can't quantify everything (at least not easily)

Measuring the global properties of systems like hair and paint is easy. But if you want to look for patterns, that can be much harder. Global properties like standard deviation, for example, tell you nothing whatsoever about arrangements.

Imagine you had a set of one hundred hairs and arranged them all in height order. To a casual visual inspection, a pattern would be obvious. But the standard deviation of heights doesn't depend on the ordering or position in the slightest, so it can often be far easier to spot such trends by a simple visual inspection. At the same time, visual inspection doesn't provide you with the quantitative estimates that are often very valuable (for a visual example see this recent post). The take-home message is to look at and measure your data in as many different ways as you can, but take heed of the next point...
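The hundred-hairs example can be made concrete. In the sketch below the hair heights are made-up Gaussian numbers and `ordering_score` is a crude measure I've invented for illustration (the fraction of neighbouring pairs in increasing order). The standard deviation can't tell the sorted and shuffled arrangements apart, while even this simple positional measure can.

```python
import random
import statistics

rng = random.Random(1)
heights = [rng.gauss(10.0, 2.0) for _ in range(100)]    # hypothetical hair heights
sorted_heights = sorted(heights)                        # arranged in height order
shuffled_heights = rng.sample(heights, k=len(heights))  # same hairs, random order


def ordering_score(values):
    """Fraction of adjacent pairs that are in increasing order (1.0 means perfectly sorted)."""
    pairs = list(zip(values, values[1:]))
    return sum(a <= b for a, b in pairs) / len(pairs)


# The global statistic is blind to the arrangement...
print(statistics.pstdev(sorted_heights), statistics.pstdev(shuffled_heights))
# ...whereas a measure that uses position is not.
print(ordering_score(sorted_heights), ordering_score(shuffled_heights))
```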

6) Beware of P-hacking

A classic approach to data analysis is to throw everything against a wall and see what sticks. That is, you can plot everything against everything and look for "significant" trends. This is an entirely legitimate approach, but point 0 applies to trends as well as individual events. Hence, for example, the infamous spurious correlations website we all know and love.
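To see point 0 at work on trends, the sketch below generates twenty variables of pure noise and correlates every one against every other. The sample sizes, the seed and the helper `pearson_r` are my own choices; the cut-off of 0.361 is the approximate two-tailed 5% critical value of the correlation coefficient for 30 points, taken from standard tables.

```python
import itertools
import math
import random


def pearson_r(x, y):
    """Plain Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


rng = random.Random(42)
n_vars, n_points = 20, 30
# Twenty completely unrelated variables: there is no real trend anywhere in here.
data = [[rng.gauss(0, 1) for _ in range(n_points)] for _ in range(n_vars)]

R_CRIT = 0.361  # approximate |r| needed for p < 0.05 with 30 points (assumed from tables)

pairs = list(itertools.combinations(range(n_vars), 2))
hits = [(i, j) for i, j in pairs if abs(pearson_r(data[i], data[j])) > R_CRIT]

print(f"{len(hits)} of {len(pairs)} pairs look 'significant'")
```

With 190 pairs and a 5% threshold you'd expect somewhere around nine or ten "significant" correlations from noise alone, which is the whole problem in miniature.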

There's nothing wrong in principle with trying to find trends, as long as you remember they aren't necessarily physically meaningful. Indeed, a correlation can even appear from pure randomness if the data range is limited (see comments). So you should probably start by searching for trends where a physical connection is obvious, and if you do find one which is more unexpected, compare different data sets to see if it's still present. If it's not present in all, or only emerges from the whole, it may well be spurious.

While visual inspection has its limitations, it's damn good at spotting patterns : so good it's much more prone to false positives than missing real trends. So as a rule of thumb, if you can see a pattern by eye, go do some statistical measurements to try and see how significant it is. If you can't see a pattern by eye at all, don't bother trying to extract any through statistical jiggery-pokery, or at the very least not by reducing complex data to a single number.

7) Randomness is not always multi-dimensional

If you have a random distribution of points at one moment, and then move them all a small amount in random directions, you'll end up with another random distribution of points. But... their positions will not be random with respect to their previous positions ! I learned this the hard way while trying to approximate the effects of a random-walk simulation with a parametric approach.
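A one-dimensional toy version makes the distinction clear. The set-up is my own simplification: points scattered uniformly along a line of length 100, then either nudged by a small Gaussian step or redrawn from scratch. Both new sets look just as random on their own, but only the redrawn set has forgotten where the points used to be.

```python
import random

rng = random.Random(7)
n = 10_000

old = [rng.uniform(0, 100) for _ in range(n)]    # random positions at the first moment
moved = [x + rng.gauss(0, 1) for x in old]       # each point takes a small random step
fresh = [rng.uniform(0, 100) for _ in range(n)]  # positions redrawn with no memory at all


def mean_abs_diff(a, b):
    """Average distance between matching points in two sets."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


# Moved points stay close to where they started; redrawn points do not.
print(mean_abs_diff(old, moved), mean_abs_diff(old, fresh))
```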

8) Causation doesn't always equal correlation

Wait, shouldn't that be the other way around ? Nope. We all know (hopefully) that two variables can be correlated for reasons other than a causal connection, e.g. a common root cause. Somewhat less commonly appreciated is that a causal connection does not always lead to a correlation, thus making any statistical parameter useless. This can happen if the dependency is weak and/or there are other, possibly stronger influences as well. Disentangling the trend from the highly scattered data can be difficult, but that doesn't mean it isn't there.

EDIT : I recently learned that this is known as Simpson's Paradox, and there's a fantastic illustration of it here.

9) Quantity has a quality all of its own

Suppose you have fifty archers shooting at fifty targets. One of them is a professional and the rest have never held a bow before in their lives. Who's more likely to hit the bullseye first ? This is closely related to the last point (see the same link for details) but this point is distinctly different enough that it's worth including separately.

The answer is that the professional is much more likely to hit the bullseye first when compared to any other individual archer. But if you compare them to the whole of the rest of the group, the tables could turn (depending on exactly how good the professional is and how incompetent everyone else is). For every shot the professional makes, the rest have forty nine chances to succeed. So the professional may appear to be outclassed by an amateur, even though this isn't really the case.
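The archery contest is simple enough to work out exactly. The sketch below assumes everyone shoots once per round, every shot is independent, the professional hits the bullseye 30% of the time and each amateur 2% of the time; these numbers, and the function name, are invented for illustration.

```python
def first_hit_odds(p_pro: float, p_amateur: float, n_amateurs: int) -> dict:
    """Who scores the first bullseye when everyone shoots once per round?"""
    # Chance that at least one of the amateurs hits in a given round.
    q = 1 - (1 - p_amateur) ** n_amateurs
    # Rounds where nobody hits are simply replayed, so condition on somebody hitting.
    decided = 1 - (1 - p_pro) * (1 - q)
    return {
        "pro_first": p_pro * (1 - q) / decided,
        "amateur_first": (1 - p_pro) * q / decided,
        "tie": p_pro * q / decided,
    }


vs_one = first_hit_odds(p_pro=0.3, p_amateur=0.02, n_amateurs=1)
vs_group = first_hit_odds(p_pro=0.3, p_amateur=0.02, n_amateurs=49)

print("pro vs one amateur:", vs_one)
print("pro vs 49 amateurs:", vs_group)
```

Against any single amateur the professional wins the race more than nine times in ten, but against all forty nine together an amateur is the more likely first scorer, exactly because of their numbers.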

Investigating the role of a particular variable can therefore be more complicated than you might expect - using realistic distributions of abilities or properties can sometimes mask what's really going on. That the most extreme outcome is found in an apparently mediocre group can simply result from the size of the group as long as there's an element of chance at work. Having more archers may be better than having no archers at all, but if two sides are equal in number but differ only in skill, the skilled ones will win every time. But exactly how many crappy archers you need to beat one good one... well, that depends on the precise skill and numbers involved.

Some of these seem obvious but only after the fact. Beforehand they can be bloody perplexing. Statistics are easy to mess up because there are so many competing factors at work and this way of thinking does not come naturally. Everyone makes mistakes in this. This does not mean all statistics are useless or that they are always abused to show whatever the authors want. Rather, a better message is that we should, as always, strive to find something that would overturn our findings - not simply pronounce inflexible proof or disproof based on a single number.

When was the last time you heard a seminar speaker claim there was 'no difference' between two groups because the difference was 'statistically non-significant'? If your experience matches ours, there's a good chance that this happened at the last talk you attended.