It's time for another round of evaluating whether ChatGPT is actually helpful for astronomical research.

My previous experiments can be found here, here, and here. The first two links looked at how well ChatGPT and Bing performed when analysing papers I myself know very well, with the upshot being an extreme case of hit-and-miss : Occasional flashes of genuine brilliance wrapped in large doses of mediocrity and sprinkled with total rubbish, to quote myself. All conversations had at least one serious flaw (though arguably in one case, which was factually and scientifically perfect but had crippling format errors).

The third link tested ChatGPT's vision analysis by trying to get it to do source extraction, which was a flat-out failure. Fortunately there have been other tests on this which show it does pretty badly in more typical situations as well, so I'm not going to bother redoing this.

With the release of ChatGPT-5, however, I do want to redo the analysis of papers. If I can have ChatGPT give me reliable scientific assessments of papers, that's potentially a big help in a number of ways, at the very least in determining if something is going to be worth my time to read in full. For this one I picked a new selection of papers as my last tests were a couple of years ago, and I can't claim I remember all their details as well as I did.

Because all the papers cover different topics, there isn't really a good way to standardise the queries. So these tests are designed to mimic how I'd use it in anger, beginning with a standardised query but then allowing more free-ranging, exploratory queries. There's no need for any great numerical precision here, but if I can establish even roughly how often GPT-5 produces a result which is catastrophically wrong or useless, that's useful information.

I began each discussion with a fairly broad request :

I'd like a short summary of the paper's major findings, an evaluation of its scientific importance and implications, what you think the major weaknesses (if any) might be and how they could be addressed. I might then ask you more detailed, specific questions. Accuracy is paramount here, so please draw your information directly from the paper whenever possible – specify your sources if you need to use another reference.

Later I modified this to stress I was interested in the strengths and weaknesses of the scientific interpretation as well as methodology, as GPT-5 seemed to get a little hung up on generic issues – number of sources, sensitivity, that sort of thing. I followed up the general summaries with specific questions tailored to each individual paper as to what they contained and where, this being a severe problem for earlier versions. At no point did I try to deliberately break it – I only tried to use it.

Below, you can find my summaries of the results of discussions about five papers together with links to all of the conversations.

0) To Mine Own Research Be True ?

But first, a couple of examples where I can't share the conversations because they involve current, potentially publishable research (I gave some initial comments already here). I decided to really start at the deep end with a query I've tried many times with ChatGPT previously and got very little out of it : to have it help with a current paper I'm writing, asking it to assess the merits and problems alike – essentially acting as a mock reviewer.

* Of which the management, like the rest of us, is generally sensible about such things. We all recognise the dangers of hallucinations, the usefulness and limitations of AI-generated code, etc. Nobody here is a fanboy nor of the anti-AI evangelical sort.

Previously I'd found it to be very disappointing at this kind of task. It tended to get hung up on minutiae, not really addressing wider scientific points at all. For example, if you asked it which bits should be cut, it might pick out the odd word or sentence or two, but it wouldn't say if a whole section was a digression from the main topic. It didn't think at scale, so to speak. It's hard to describe precisely but it felt like it had no understanding of the wider context at all; it discussed details, not science. It wasn't that using it for evaluations was of no value whatsoever, but it was certainly questionable whether it was a productive use of one's time.

With the current paper I have in draft, ChatGPT-5's response was worlds apart from its previous meagre offerings. It described itself as playing the role of a "constructively horrible" reviewer (its own choice of phrase) and it did that, I have to say, genuinely very well. Its tone was supportive but not sycophantic. It suggested highly pertinent scientific critiques, such as the discussion on the distance of a galaxy – which is crucial for the interpretation in this case – being too limited and alternatives being fully compatible with the data. It told me when I was being over-confident in phrasing, gave accurate indications of where I was overly repetitive, and came up with perfectly sensible, plausible interpretations of the same data.

Even its numbers were, remarkably, actually accurate* (unlike others I haven't seen it make some classic errors in basic facts and numbers, including the number of specified letters even in fictional words; I tried reproducing some of these multiple times but couldn't). At least, that is, those I checked, but all those I checked were on the money – a far cry indeed from older versions ! Similarly, citations were all correct and relevant to its claims : none were total hallucinations. That is a big upgrade.

* ChatGPT itself claims that it does actual proper calculations whenever the result isn't obvious (like 2+2, for which training data is enough) or accuracy is especially important.

When I continued the discussion... it kept giving excellent, insightful analysis; previous versions tended to degenerate into incoherency and stupidity in long conversations. It wasn't always right – it made one major misunderstanding to one inquiry that I thought it should have avoided* – but it was right more than, say, 95% of the time, and its single significant misunderstanding was very easily corrected**. If it was good for bouncing ideas off before, now it's downright excellent.

* This wasn't a hallucination as it didn't fabricate anything, it just misunderstood the question.

** And how many conversations with real people feature at least one such difficulty ? Practically all of them in my experience.

The second unshareable test was to feed it my rejected ALMA proposal and (subsequently) the reviewer responses. Here too the tone of GPT-5 shines. It phrased things very carefully but without walking on eggshells, explaining what the reviewers' thought processes might have been and how to address them in the future without making me feel like I'd made some buggeringly stupid mistake. I asked it initially to guess how well the proposal would have been ranked and it said second quartile, borderline possibility for acceptance... praiseworthy and supportive, but not toadying, and not raising false hopes.

When I told it the actual results (lowest quartile, i.e. useless), it agreed that some of the comments were objectionable, but gave me clear, precise instructions as to how they could be countered. Those are things I would find extremely difficult to do on my own : I read some of the stupider claims ("the proposal flow feels a bit narrative"... FFS, it damn well should be narrative and I will die on this hill) and just want to punch the screen*, but GPT-5 gave me ways to address those concerns. It said things like, "you and I know that, but...".

* No, not really ! I just need to bitch about it to people. Misery loves company, and in a perverse bit of luck, nobody in our institute got any ALMA proposals accepted this year either.

It made me feel like these were solvable problems after all. For example, it suggested the rather subtle reframing of the proposal from detection experiment (which ALMA disfavours) to hypothesis testing (which is standard scientific practice that nobody can object to). This is really, really good stuff, and the insight into what the reviewers might have been thinking, or not understanding, made me look at the comments in a much more upbeat light. Again, it had one misunderstanding about a question, but again this was easily clarified and it responded perfectly on the second attempt.

On to the papers !

1) The Blob(s)

This paper is one of the most interesting I've read in recent years, concerning the discovery of strange stellar structures in Virgo which the authors attribute to being ram pressure dwarfs. Initially I tried to feed it the paper by providing a URL link, but this didn't work. As I found out with the second paper, trying to do it this way is simply a mistake : in this and this alone does GPT-5 consistently hallucinate. That is, it claims it's done things which it hasn't done, reporting wrong information and randomly giving failure messages.

Not a great start, but it gets better. When a document is uploaded, hallucinations aren't quite eliminated, but good lord they're massively reduced compared to previous versions. It's weird that its more general web search capabilities appear rather impressive, but give it a direct link and it falls over like a crippled donkey. You can't have everything, I guess.

Anyway, you can read my full discussion with ChatGPT here. In brief :

- Summary : Factually flawless. All quoted figures and statements are correct. It chose these in a sensible way to give a concise summary of the most important points. Both scientific strengths and weaknesses are entirely sensible, though the latter are a little bland and generic (improve sensitivity and sample size, rather than suggesting alternative interpretations).

- Discussion : When pressed more directly for alternative interpretations, it gave sensible suggestions, pointing out pertinent problems with the methodology and data that allow for this.

- Specific inquiries : I asked it about the AGES clouds that I know are mentioned in this paper (I discovered them) and here I encountered the only real hallucination in all the tests. It named three different AGES clouds that are indeed noteworthy because they're optically dim and dark ! These are not mentioned in this paper at all. When I asked it to check again more carefully, it reported the correct clouds which the authors refer to. When I asked it about things I knew the paper didn't discuss, it correctly reported that the paper didn't discuss this.

- Overall : Excellent, once you accept the need to upload the document. Possibly the hallucination might have been a holdover from that previous attempt to provide the URL, and in my subsequent discussions I emphasised more strongly the need for accuracy and to distinguish what the paper contained from GPT-5's own inferences. This seems to have done the trick. Even with these initial hiccups, however, the quality of the scientific discussions was very high. It felt like talking with someone who genuinely knew what the hell they were talking about.

2) The Smudge

This one is about finding a galaxy so faint the authors detected it by looking for its globular clusters. They also find some very diffuse emission in between them, which is pretty strong confirmation that it's indeed a galaxy of sorts.

At this point I hadn't learned my lesson. Giving ChatGPT a link caused it to hallucinate in a sporadic, unpredictable way. It managed to get some things spot on but randomly claimed it couldn't access the paper at all, and invented content that wasn't present in the paper. Worse, it basically lied about its own failures.

You can read my initial discussion here, but frustrated by these problems, I began in a second thread with an uploaded document here. That one, I'm pleased to say, had no such issues.

- Summary : Again, flawless. A little bland, perhaps, but that's what I wanted (I haven't tried asking it for something more sarcastic). The content was researcher level rather than general public but again I didn't ask for outreach content. It correctly highlighted possible flaws like the inferred high dark matter content being highly uncertain due to an extremely large extrapolation from a relatively novel method.

- Discussion : In the hallucinatory case, it actually came up with some very sensible ideas even though these weren't in the paper. For example, I asked it about the environment of the galaxy and it gave some plausible suggestions on how this could have contributed to the object's formation – the problem was that none of this was in the paper as it claimed. Still, the discussion on this – even when I pushed it to ideas that are very new in the literature – was absolutely up to scratch. When I suggested one of its ideas might be incorrect, it clarified what it meant without changing the fundamental basis of its scenario in a way that convinced me it was at least plausible : this was indeed a true clarification, not a goalpost-shifting modification. It gave a detailed, sensible discussion of how tidal stripping can preferentially affect different components of a galaxy, something which is hardly a trivial topic.

- Specific inquiries : When using the uploaded document, this was perfect. Numbers were correct. It reported correctly both when things were and weren't present in the article, with no hallucinations of any kind. It expanded on my inquiries into more general territory very clearly and concisely.

- Overall : Great stuff. Once again, it felt like a discussion with a knowledgeable colleague who could both explain specific details but also the general techniques used. Qualitatively and quantitatively accurate, with an excellent discussion about the wider implications.

3) ALFALFA Dark Galaxies

My rather brief summary is here. This is the discovery of 140-odd dark galaxy candidates in archival ALFALFA HI data. The ChatGPT discussion is here. This time I went straight to file upload and had no issues with hallucinations whatsoever.

- Summary : Once again, flawless. Maybe a little bland and generic with regard to other interpretations, but it picked out the major alternative hypothesis correctly. And in this case, nobody else has come up with any other better ideas, so I wouldn't expect it to suggest anything radical without explicitly prompting it to.

- Discussion : It correctly understood my concern about whether the dynamical mass estimates are correct and gave a perfect description of the issue. This wasn't a simple case of "did they use the equation correctly" but a contextual "was this the correct equation to be using and were the assumptions correct" case, relating not just to individual objects but also their environment. Productive and insightful.

- Specific inquiries : Again flawless, not claiming the authors said anything they didn't or claiming they didn't say anything they did. Numbers and equations used were reported correctly.

- Overall/other: Superb. I decided to finish by asking a more social question – how come ALFALFA have been so cagey about the "dark galaxy" term in the past (they use the god-awful "almost darks", which I loathe) but here at least one team member is on board with it ? It came back with answers which were both sociologically (a conservative culture in the past, a change of team here) and scientifically (deeper optical data with more robust constraints) sensible ideas. It also ended with the memorable phrase, "[the authors are] happy to take the “dark galaxy” plunge — but with the word “candidate” as a fig leaf of scientific prudence."

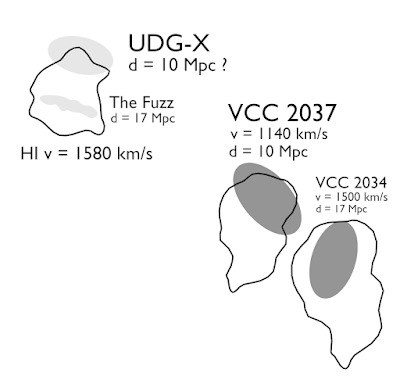

4) The VCC 2034 System

This is a case of a small fuzzy patch of stars near some larger galaxies, possibly with a giant HI stream, which has proven remarkably hard to explain. The latest paper, which I summarise here, discounts the possibility that it formed from the long stream as it apparently doesn't exist, but (unusually) doesn't figure out an alternative scenario either. The ChatGPT discussion is here.

- Summary : Factually perfect, though it didn't directly include that the origin of the object was unknown. Arguably "challenges simple ram-pressure stripping scenarios and suggests either an intergalactic or pre-cluster origin" implies this, but I'd have preferred it to state it more directly. Nevertheless, the most crucial point that previous suggestions don't really hold up came through very clearly.

- Discussion : Very good, but not perfect. While it didn't get anything wrong, it missed out the claims in the paper against the idea of ram pressure dwarfs more generally (about the main target object of the study it was perfect). With some more direct prompting it did eventually find this, and the ensuing discussion was productive, pointing out some aspects of this I hadn't considered. I'm not entirely convinced this was correct, but no more than I doubt some of the claims made in the paper itself – PhD level hardly means above suspicion, after all. And the discussion on the dynamics of the object was extremely useful, with ChatGPT again raising some points from the paper I'd completely missed when I first read it; the discussion on the survival of such objects in relation to the intracluster medium was similarly helpful.

- Specific inquiries : Aside from the above miss, this was perfect. When I asked it to locate particular numbers and discuss their implications it did so, and likewise it correctly reported when the paper didn't comment on a topic I asked about.

- Overall : Not flawless, but damn good, and certainly useful. One other discussion point caused a minor trip-up. When I brought in a second paper (via upload) for comparison and mentioned my own work for context, it initially misinterpreted and appeared to ignore the paper. This was easily caught and fixed with a second prompt, and the results were again helpful. By no means was this hallucination – it felt more like it was getting carried away with itself.

5) An Ultra Diffuse Galaxy That Spins Too Slowly

This was a paper that I'd honestly forgotten all about until I re-read my own summary. It concerns a UDG that initial observations indicated lacked dark matter entirely, but then another team came along and found that would be unsustainable and it was probably just an inclination angle measurement error. Then the original team came back with new observations and simulations, and they found it does have some dark matter after all – at a freakishly low concentration, but enough to stabilise it. The ChatGPT discussion is here.

- Summary : As usual this was on the money, bringing in all the key points of the paper and giving a solid scientific assessment and critique. Rather than dealing with trivialities like sample size or simulation resolution, it noted that maybe they'd need to account more for the effects of environment or use different physics for the effects of feedback on star formation.

- Discussion : As with the fourth paper, this was again excellent but not quite complete. It missed out one of my favourite* bits of speculation in the paper that this object could tell us something directly about the physical nature of dark matter. It did get this with direct prompting, but I had to be really explicit about it. To be fair, this is just one paragraph in the whole article, but reading between the lines I felt it was a point the authors really wanted to make. On the other hand, that's just my opinion and it certainly isn't the main point of the work.

- Specific inquiries : Yep, once again it delivered the goods. No inaccuracies. It reported the crucial points correctly and described the comparisons with previous works perfectly. Again, it didn't report any claims the authors didn't make.

- Overall : Excellent. I allowed myself to branch out to a wider discussion of the cold dark matter paradigm and it came back with some great papers I should check out regarding stability problems in MOND. It sort of back-pedalled a little bit on discussions about the radial acceleration relation, but this was more a nuanced clarification than revising its claims : CDM gets RAR as a result of baryonic physics tuning, but it gets this for free as a result of tuning for other parameters rather than directly for RAR itself; MOND gets RAR as a main feature. If that's not a PhD level discussion then I don't know what is.

* More generally, it seems pretty good at picking up on the same stuff that I do, but it would be silly to expect 100% alignment.

Summary and Conclusions

On my other blogs I've gone on about the importance of thresholds. Well, we've crossed one. Even the more positive assessments of GPT-5 tend to label it as an incremental upgrade, but I violently disagree. I went back and checked my earlier discussion with GPT-4o about my ALMA proposal and confirmed that it was mainly spouting generic, useless crap... GPT-5 is a massive improvement. It discusses nuanced and niche scientific issues with a robust understanding of their broader context. In other threads I've found it fully capable of giving practical suggestions and calculations which I've found just work. Its citations are pertinent and exist.

This really does feel like a breakthrough moment. At first it was a cool tech demo, then it was a cool toy. Now it's an actually useful tool for everyday use – potentially an incredibly important one. Where people are coming from when they say it gets basic facts wrong I've honestly no idea. The review linked above says it gave a garbage response when fed a 160+ page document and was anything but PhD-level, but in my tests with typical length papers (generally 12-30 pages) I would absolutely and unequivocally call it PhD level. No question of it.

This is not to say it's perfect. For one thing, even though there's a GUI setting for this, it's very hard to get it to stop offering annoying follow-up suggestions it could do. This is why you'll see my chats with it sometimes end with "and they all live happily ever after", because I had to put that in my custom instructions to give it an alternative ending (in one memorable case it came up with "one contour to rule them all and in the darkness bind them"*). Even then it doesn't always work. And it always delivers everything in bullet-point form : no doubt this can be altered, but I haven't tried... generally I don't hate this though.

* I really like the personality of GPT-5. It's generally clear and to the point, straightforward and easy to read, but with the occasional unexpected witticism that keeps things just a little more engaging.

Of course, it does still make mistakes. Misinterpretations of the questions appear to be the most common, but these are very easily spotted and fixed. Incompleteness seems to be less common but more serious, though I'd stress that expecting perfection from anything is extremely foolish. And actual hallucinations of the kind that still plagued GPT-4 are now nearly non-existent, provided you give it rigorous instructions.

So that's my first week with GPT-5, a glowing success and vastly better than I was expecting. Okay, people on reddit, I get that you missed the sycophantic ego-stroking personality of GPT-4, so whine about how your virtual friend has died all you want. But all these claims that it's got dumber, and has an IQ barely above that of a Republican voter... what the holy hell are you talking about ? That makes NO sense to me whatsoever.

Anyway I've put my money where my mouth is and subscribed to Plus. Watch this space : in a month I'll report back on whether it's worth it.